We are going to explore key patterns for interacting with Kubernetes resources using client SDKs, focusing on best practices for scalability, efficiency, and maintainability. Each pattern’s use cases, pros, and cons are explained with examples in Go.

Direct API client

The direct API client uses Kubernetes’ client-go package to interact with the API server for CRUD operations.

```go
package main

import (
	"context"
	"flag"
	"fmt"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
)

func main() {
	kubeconfig := flag.String("kubeconfig", "/path/to/kubeconfig", "path to the kubeconfig file")
	flag.Parse()

	// Build a REST config from the kubeconfig file.
	config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)
	if err != nil {
		panic(err)
	}

	// The typed clientset exposes one client per API group/version.
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// Every call like this is a round trip to the API server; nothing is cached.
	pods, err := clientset.CoreV1().Pods("default").List(context.Background(), metav1.ListOptions{})
	if err != nil {
		panic(err)
	}

	for _, pod := range pods.Items {
		fmt.Printf("Pod Name: %s\n", pod.Name)
	}
}
```

Purpose

- Raw API access to Kubernetes resources.

Pros

- Simple, direct, no additional setup.

Cons

- No caching: every call is a round trip to the API server, which adds latency and server load when calls are frequent.

Use Case

- One-off operations, simple scripts.
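Even a one-off script can hit large collections, and a plain List returns everything in one response. metav1.ListOptions has Limit and Continue fields for chunked listing: each response carries a continue token in its list metadata, and the client sends it back until the token comes back empty. The sketch below models only that loop in plain Go so it runs without a cluster; `fakeServer`, `listPage`, and `listAll` are hypothetical names, not client-go APIs, and the offset-based token is a simplification of the server's opaque one.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
)

// page holds the two parts of a list response that matter for chunking:
// the items and the continue token carried in the list metadata.
type page struct {
	Items    []string
	Continue string
}

// fakeServer is a hypothetical stand-in for the API server's pod collection.
type fakeServer struct {
	pods []string
}

// listPage returns at most limit items starting where token points, plus the
// token for the next chunk ("" when nothing is left). The real server's token
// is opaque; a plain offset is used here only to keep the sketch short.
func (s *fakeServer) listPage(limit int, token string) (page, error) {
	start := 0
	if token != "" {
		n, err := strconv.Atoi(token)
		if err != nil || n < 0 || n > len(s.pods) {
			return page{}, fmt.Errorf("invalid continue token %q", token)
		}
		start = n
	}
	end := start + limit
	if end >= len(s.pods) {
		return page{Items: s.pods[start:]}, nil
	}
	return page{Items: s.pods[start:end], Continue: strconv.Itoa(end)}, nil
}

// listAll keeps requesting chunks until the continue token comes back empty,
// the same loop you would write around ListOptions{Limit, Continue}.
func listAll(s *fakeServer, limit int) ([]string, int, error) {
	if limit <= 0 {
		return nil, 0, errors.New("limit must be positive")
	}
	var all []string
	calls := 0
	token := ""
	for {
		p, err := s.listPage(limit, token)
		calls++
		if err != nil {
			return nil, calls, err
		}
		all = append(all, p.Items...)
		if p.Continue == "" {
			return all, calls, nil
		}
		token = p.Continue
	}
}

func main() {
	s := &fakeServer{pods: []string{"pod-a", "pod-b", "pod-c", "pod-d", "pod-e"}}
	pods, calls, err := listAll(s, 2)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d pods in %d requests: %v\n", len(pods), calls, pods)
}
```

With five pods and a limit of 2, the loop makes three requests. Against a real cluster, the loop body would call clientset.CoreV1().Pods("default").List(ctx, metav1.ListOptions{Limit: 500, Continue: token}) (500 is an arbitrary choice) and take the next token from the returned list's Continue field.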

Informers

Informers watch resources and cache their state locally, allowing efficient read operations.

```go
package main

import (
	"context"
	"fmt"
	"os"
	"os/signal"
	"time"

	"k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/clientcmd"
)

func main() {
	config, err := clientcmd.BuildConfigFromFlags("", "/path/to/kubeconfig")
	if err != nil {
		panic(err)
	}
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// One shared factory: one watch per resource type, resynced every minute.
	factory := informers.NewSharedInformerFactory(clientset, time.Minute)
	podInformer := factory.Core().V1().Pods().Informer()

	// Handler funcs live in the cache package, not in the informers package.
	podInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc: func(obj interface{}) {
			fmt.Println("Pod added")
		},
		UpdateFunc: func(oldObj, newObj interface{}) {
			fmt.Println("Pod updated")
		},
		DeleteFunc: func(obj interface{}) {
			fmt.Println("Pod deleted")
		},
	})

	// Stop the informers on Ctrl+C instead of blocking forever.
	ctx, stop := signal.NotifyContext(context.Background(), os.Interrupt)
	defer stop()

	factory.Start(ctx.Done())
	factory.WaitForCacheSync(ctx.Done())
	<-ctx.Done()
}
```

Purpose

- Watch and cache resources efficiently.

Pros

- Efficient caching with near real-time updates.

- Maintains a local cache of resources.

- Uses the Kubernetes watch API instead of repeated polling.

- Provides event notifications (Add/Update/Delete).

- Handles reconnection automatically.

Cons

- The cache can lag slightly behind the API server; more complex to set up than direct calls.

Use Case

- Controllers that need to react to resource changes.
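Conceptually, an informer lists the resource once, then applies a stream of watch events to its local store and calls your handlers. The stdlib-only sketch below models just that store-then-notify step; `toyInformer`, `pod`, and `handlers` are hypothetical names. The real shared informer also buffers deltas, runs handlers asynchronously, resyncs periodically, and re-establishes broken watches, none of which is modeled here.

```go
package main

import (
	"fmt"
	"sort"
)

// eventType mirrors the watch event types the API server streams.
type eventType string

const (
	added    eventType = "ADDED"
	modified eventType = "MODIFIED"
	deleted  eventType = "DELETED"
)

// pod is a hypothetical, trimmed-down object: a "namespace/name" key and a phase.
type pod struct {
	Key   string
	Phase string
}

type event struct {
	Type eventType
	Obj  pod
}

// handlers has the same shape as cache.ResourceEventHandlerFuncs.
type handlers struct {
	AddFunc    func(obj pod)
	UpdateFunc func(oldObj, newObj pod)
	DeleteFunc func(obj pod)
}

// toyInformer applies each event to its cache first, then notifies handlers.
type toyInformer struct {
	store    map[string]pod
	handlers handlers
}

func newToyInformer(h handlers) *toyInformer {
	return &toyInformer{store: map[string]pod{}, handlers: h}
}

// run consumes events until the channel is closed. Whether a handler sees an
// add or an update depends on whether the key was already cached.
func (inf *toyInformer) run(events <-chan event) {
	for ev := range events {
		old, existed := inf.store[ev.Obj.Key]
		switch ev.Type {
		case added, modified:
			inf.store[ev.Obj.Key] = ev.Obj
			if existed && inf.handlers.UpdateFunc != nil {
				inf.handlers.UpdateFunc(old, ev.Obj)
			} else if !existed && inf.handlers.AddFunc != nil {
				inf.handlers.AddFunc(ev.Obj)
			}
		case deleted:
			delete(inf.store, ev.Obj.Key)
			if inf.handlers.DeleteFunc != nil {
				inf.handlers.DeleteFunc(ev.Obj)
			}
		}
	}
}

// cachedKeys lists the cache contents in sorted order.
func (inf *toyInformer) cachedKeys() []string {
	keys := make([]string, 0, len(inf.store))
	for k := range inf.store {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

// demo replays a short watch stream and returns the handler log and cache keys.
func demo() ([]string, []string) {
	var log []string
	inf := newToyInformer(handlers{
		AddFunc:    func(p pod) { log = append(log, "add "+p.Key) },
		UpdateFunc: func(o, n pod) { log = append(log, "update "+n.Key+" "+o.Phase+"->"+n.Phase) },
		DeleteFunc: func(p pod) { log = append(log, "delete "+p.Key) },
	})
	events := make(chan event, 4)
	events <- event{Type: added, Obj: pod{Key: "default/web", Phase: "Pending"}}
	events <- event{Type: modified, Obj: pod{Key: "default/web", Phase: "Running"}}
	events <- event{Type: added, Obj: pod{Key: "default/db", Phase: "Pending"}}
	events <- event{Type: deleted, Obj: pod{Key: "default/db", Phase: "Pending"}}
	close(events)
	inf.run(events)
	return log, inf.cachedKeys()
}

func main() {
	fmt.Println(demo())
}
```

Updating the cache before calling handlers is the design choice that lets a handler (or a worker it triggers) read the new state back from the cache immediately.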

Listers

Listers are built on top of informers and allow read access to the local cache.

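```go
package main

import (
	"fmt"
	"time"

	"k8s.io/apimachinery/pkg/labels"
	"k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
)

func main() {
	config, err := clientcmd.BuildConfigFromFlags("", "/path/to/kubeconfig")
	if err != nil {
		panic(err)
	}
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	factory := informers.NewSharedInformerFactory(clientset, time.Minute)
	// Requesting the lister registers the pod informer with the factory, so do
	// it before factory.Start to have that informer started with the others.
	podLister := factory.Core().V1().Pods().Lister()

	stopCh := make(chan struct{})
	defer close(stopCh)
	factory.Start(stopCh)

	// Block until the initial LIST has populated every registered cache.
	for informerType, synced := range factory.WaitForCacheSync(stopCh) {
		if !synced {
			panic(fmt.Sprintf("cache for %v did not sync", informerType))
		}
	}

	// Served from the local cache: no API server round trip.
	pods, err := podLister.Pods("default").List(labels.Everything())
	if err != nil {
		panic(err)
	}
	for _, pod := range pods {
		fmt.Printf("Pod Name: %s\n", pod.Name)
	}
}
```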

Purpose

- Read-only access to cached resources.

Pros

- Query efficiency with cached data.

- Uses the informer’s cache.

- No API server calls on reads.

- Fast reads.

Cons

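- Reads can return stale data in highly dynamic environments, because the cache is only eventually consistent with the API server.

- Returned objects are shared with the cache; deep-copy them (for example with the generated DeepCopy method) before modifying them.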

Use Case

- When you need frequent read access to resources.
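A lister read is a namespace filter plus a label selector evaluated against the cache. The sketch below models that in plain Go; `toyLister`, `matches`, and `deepCopy` are hypothetical stand-ins, and the real selector supports more than the equality matching shown here. With client-go, the equivalent read would be podLister.Pods("default").List(labels.SelectorFromSet(labels.Set{"app": "web"})). The sketch also shows why results should be treated as read-only: they point into the shared cache.

```go
package main

import (
	"fmt"
	"sort"
)

// pod is a hypothetical cached object with just the fields a lister filters on.
type pod struct {
	Namespace string
	Name      string
	Labels    map[string]string
}

// deepCopy stands in for the generated DeepCopy method. Lister results point
// into the shared cache, so copy before mutating.
func (p *pod) deepCopy() *pod {
	labels := make(map[string]string, len(p.Labels))
	for k, v := range p.Labels {
		labels[k] = v
	}
	return &pod{Namespace: p.Namespace, Name: p.Name, Labels: labels}
}

// toyLister answers reads from an in-memory cache and never calls a server.
type toyLister struct {
	cache map[string]*pod // keyed by "namespace/name"
}

// matches is equality-based selection: every key=value pair in selector must
// be present on the object. An empty selector matches everything, like
// labels.Everything().
func matches(selector, objLabels map[string]string) bool {
	for k, v := range selector {
		if got, ok := objLabels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// list returns the cached pods in namespace that match selector, sorted by name.
func (l *toyLister) list(namespace string, selector map[string]string) []*pod {
	var out []*pod
	for _, p := range l.cache {
		if p.Namespace == namespace && matches(selector, p.Labels) {
			out = append(out, p)
		}
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Name < out[j].Name })
	return out
}

func names(pods []*pod) []string {
	out := make([]string, len(pods))
	for i, p := range pods {
		out[i] = p.Name
	}
	return out
}

func newDemoLister() *toyLister {
	l := &toyLister{cache: map[string]*pod{}}
	for _, p := range []*pod{
		{Namespace: "default", Name: "web-1", Labels: map[string]string{"app": "web"}},
		{Namespace: "default", Name: "web-2", Labels: map[string]string{"app": "web"}},
		{Namespace: "default", Name: "db", Labels: map[string]string{"app": "db"}},
		{Namespace: "kube-system", Name: "web-3", Labels: map[string]string{"app": "web"}},
	} {
		l.cache[p.Namespace+"/"+p.Name] = p
	}
	return l
}

func main() {
	l := newDemoLister()
	fmt.Println(names(l.list("default", map[string]string{"app": "web"})))
	fmt.Println(names(l.list("default", nil)))

	// Mutate a copy, never the cached object itself.
	edited := l.list("default", map[string]string{"app": "db"})[0].deepCopy()
	edited.Labels["app"] = "db-migrated"
	fmt.Println(l.cache["default/db"].Labels["app"], edited.Labels["app"])
}
```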

Work Queues

Work queues decouple resource change detection from processing logic.

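```go
package main

import (
	"fmt"

	"k8s.io/client-go/util/workqueue"
)

func main() {
	queue := workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "PodsQueue")
	defer queue.ShutDown()

	// Producers enqueue keys (typically "namespace/name").
	queue.Add("pod1")
	queue.Add("pod2")

	// Process items. Get blocks when the queue is empty, so this demo only
	// loops while items remain; a real worker loops until shutdown.
	for queue.Len() > 0 {
		item, shutdown := queue.Get()
		if shutdown {
			break
		}
		fmt.Printf("Processing: %s\n", item)
		queue.Forget(item) // success: clear any retry backoff for this item
		queue.Done(item)   // always mark the item as finished
	}
}
```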

Purpose

- Queue-based processing of resources.

Pros

- Decouples detection from processing; supports retries.

- Rate limiting.

- Retry with backoff.

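- Deduplication: repeated adds of the same key collapse into one entry, and a key is never processed by two workers at once.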

Cons

- Adds complexity: workers must call Done after every Get, and Forget or AddRateLimited to manage retries.

Use Case

- Controllers that need reliable, deduplicated processing with retries.
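Two properties of client-go's work queue do most of the work in a controller: repeated adds of the same key collapse into one entry, and a key that is being processed is not handed to another worker; if it changes again meanwhile, it is re-queued once Done is called. The single-goroutine sketch below models that bookkeeping with a FIFO plus "dirty" and "processing" sets, which is our understanding of how the real queue works; `toyQueue` and its methods are hypothetical, and the real queue's locking, blocking Get, and rate limiting are left out.

```go
package main

import "fmt"

// toyQueue models the bookkeeping inside client-go's workqueue: a FIFO slice
// plus "dirty" and "processing" sets. It is single-goroutine only; the real
// queue guards this state with a lock and makes Get block until work arrives.
type toyQueue struct {
	queue      []string
	dirty      map[string]bool // keys waiting to be processed
	processing map[string]bool // keys handed out by get but not yet done
}

func newToyQueue() *toyQueue {
	return &toyQueue{dirty: map[string]bool{}, processing: map[string]bool{}}
}

// add collapses duplicates and holds back a key that is currently being
// processed until done is called, so no key is handled by two workers at once.
func (q *toyQueue) add(key string) {
	if q.dirty[key] {
		return
	}
	q.dirty[key] = true
	if q.processing[key] {
		return
	}
	q.queue = append(q.queue, key)
}

// get pops the next key; ok is false when the queue is empty.
func (q *toyQueue) get() (key string, ok bool) {
	if len(q.queue) == 0 {
		return "", false
	}
	key, q.queue = q.queue[0], q.queue[1:]
	delete(q.dirty, key)
	q.processing[key] = true
	return key, true
}

// done finishes key and re-queues it if it was added again meanwhile.
func (q *toyQueue) done(key string) {
	delete(q.processing, key)
	if q.dirty[key] {
		q.queue = append(q.queue, key)
	}
}

// demo returns the order in which keys are handed to the worker.
func demo() []string {
	q := newToyQueue()
	q.add("default/web")
	q.add("default/web") // duplicate event: collapsed into one entry
	q.add("default/db")

	var processed []string
	key, _ := q.get() // "default/web" is now being processed
	processed = append(processed, key)
	q.add("default/web") // arrives mid-processing: remembered, not queued yet
	q.done(key)          // now re-queued behind "default/db"

	for {
		k, ok := q.get()
		if !ok {
			break
		}
		processed = append(processed, k)
		q.done(k)
	}
	return processed
}

func main() {
	fmt.Println(demo())
}
```

Queuing keys rather than whole objects is what makes this deduplication possible: the worker looks up the latest state when it runs, so collapsing several events into one entry loses nothing.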

Indexers

Indexers improve data retrieval efficiency by organizing cached resources.

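```go
package main

import (
	"fmt"

	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/tools/cache"
)

func main() {
	// Objects are stored under "namespace/name" keys; the "namespace" index
	// maps each namespace to the keys of the pods in it.
	indexer := cache.NewIndexer(cache.MetaNamespaceKeyFunc, cache.Indexers{
		"namespace": func(obj interface{}) ([]string, error) {
			pod, ok := obj.(*corev1.Pod)
			if !ok {
				return nil, fmt.Errorf("unexpected object type %T", obj)
			}
			return []string{pod.Namespace}, nil
		},
	})

	pod1 := &corev1.Pod{ObjectMeta: metav1.ObjectMeta{Name: "pod1", Namespace: "default"}}
	pod2 := &corev1.Pod{ObjectMeta: metav1.ObjectMeta{Name: "pod2", Namespace: "default"}}
	for _, pod := range []*corev1.Pod{pod1, pod2} {
		if err := indexer.Add(pod); err != nil {
			panic(err)
		}
	}

	pods, err := indexer.ByIndex("namespace", "default")
	if err != nil {
		panic(err)
	}
	for _, obj := range pods {
		fmt.Printf("Indexed Pod: %s\n", obj.(*corev1.Pod).Name)
	}
}
```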

Purpose

- Fast lookups based on custom criteria.

Pros

- Speeds up retrieval for filtered queries.

- Custom indexes on cached data.

- Fast retrieval by indexed fields.

Cons

- Higher memory usage for large datasets.

Use Case

- When you need to frequently look up resources by non-standard fields.
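Under the hood, an indexer keeps, for each named index function, a map from index value to the set of object keys, and updates it on every write. The stdlib-only sketch below models that bookkeeping with a hypothetical `toyIndexer` and a hypothetical label-based "app" index. It also shows the step that is easy to forget when hand-rolling an index: removing the entries an object's previous version produced. With client-go you would instead pass index functions in a cache.Indexers map, or register them on a shared informer with AddIndexers, typically before it starts.

```go
package main

import (
	"fmt"
	"sort"
)

// pod is a hypothetical cached object.
type pod struct {
	Namespace, Name string
	Labels          map[string]string
}

// indexFunc maps an object to zero or more index values, like cache.IndexFunc.
type indexFunc func(p pod) []string

// toyIndexer is a hypothetical model of cache.Indexer: a primary store keyed by
// "namespace/name" plus, for each named index, a map from value to key set.
type toyIndexer struct {
	items   map[string]pod
	funcs   map[string]indexFunc
	indices map[string]map[string]map[string]bool // index -> value -> keys
}

func newToyIndexer(funcs map[string]indexFunc) *toyIndexer {
	x := &toyIndexer{
		items:   map[string]pod{},
		funcs:   funcs,
		indices: map[string]map[string]map[string]bool{},
	}
	for name := range funcs {
		x.indices[name] = map[string]map[string]bool{}
	}
	return x
}

// upsert stores p and keeps every index consistent, first removing the entries
// that the previous version of the same object produced.
func (x *toyIndexer) upsert(p pod) {
	key := p.Namespace + "/" + p.Name
	if old, ok := x.items[key]; ok {
		for name, fn := range x.funcs {
			for _, v := range fn(old) {
				delete(x.indices[name][v], key)
			}
		}
	}
	x.items[key] = p
	for name, fn := range x.funcs {
		for _, v := range fn(p) {
			if x.indices[name][v] == nil {
				x.indices[name][v] = map[string]bool{}
			}
			x.indices[name][v][key] = true
		}
	}
}

// byIndex returns the names of objects with the given index value. It touches
// only the matching keys instead of scanning the whole cache.
func (x *toyIndexer) byIndex(index, value string) []string {
	var names []string
	for key := range x.indices[index][value] {
		names = append(names, x.items[key].Name)
	}
	sort.Strings(names)
	return names
}

func newDemoIndexer() *toyIndexer {
	x := newToyIndexer(map[string]indexFunc{
		"namespace": func(p pod) []string { return []string{p.Namespace} },
		"app": func(p pod) []string {
			if app, ok := p.Labels["app"]; ok {
				return []string{app}
			}
			return nil
		},
	})
	x.upsert(pod{"default", "web-1", map[string]string{"app": "web"}})
	x.upsert(pod{"default", "web-2", map[string]string{"app": "web"}})
	x.upsert(pod{"kube-system", "dns", map[string]string{"app": "dns"}})
	x.upsert(pod{"default", "web-2", map[string]string{"app": "canary"}}) // relabel
	return x
}

func main() {
	x := newDemoIndexer()
	fmt.Println(x.byIndex("app", "web"))
	fmt.Println(x.byIndex("app", "canary"))
	fmt.Println(x.byIndex("namespace", "default"))
}
```

The memory cost listed above comes from exactly these extra maps: every index adds one key-set entry per object per index value.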

Combining patterns for efficiency

Here’s how to combine these patterns:

1. Informers + Listers: Use informers to cache resources and listers to query them. Example: Controllers managing reconciliation logic.

2. Informers + Work Queues: Use informers to detect changes and enqueue events for processing. Ideal for ensuring reliable processing.

3. Informers + Indexers: Cache resources and optimize queries with indexers for specific use cases.

4. Direct API Client + Informers: Use the direct API client for writes and one-off setup, and informers for ongoing monitoring and reads.

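The example below wires an informer, a lister, and a rate-limited work queue together: the event handler enqueues keys, and a worker reads each pod back from the lister's cache.

```go
package main

import (
	"context"
	"fmt"
	"os"
	"os/signal"
	"time"

	apierrors "k8s.io/apimachinery/pkg/api/errors"
	"k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/client-go/util/workqueue"
)

func main() {
	config, err := clientcmd.BuildConfigFromFlags("", "/path/to/kubeconfig")
	if err != nil {
		panic(err)
	}
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	factory := informers.NewSharedInformerFactory(clientset, time.Minute)
	podInformer := factory.Core().V1().Pods().Informer()
	podLister := factory.Core().V1().Pods().Lister()

	queue := workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "PodQueue")
	defer queue.ShutDown()

	// Enqueue "namespace/name" keys rather than objects: repeated events for
	// the same pod then collapse into a single queue entry.
	podInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc: func(obj interface{}) {
			if key, err := cache.MetaNamespaceKeyFunc(obj); err == nil {
				queue.Add(key)
			}
		},
	})

	// Stop everything on Ctrl+C.
	ctx, stop := signal.NotifyContext(context.Background(), os.Interrupt)
	defer stop()

	factory.Start(ctx.Done())
	if !cache.WaitForCacheSync(ctx.Done(), podInformer.HasSynced) {
		panic("pod cache did not sync")
	}

	go func() {
		for {
			item, shutdown := queue.Get()
			if shutdown {
				return
			}
			func() {
				defer queue.Done(item) // always release the key
				namespace, name, err := cache.SplitMetaNamespaceKey(item.(string))
				if err != nil {
					queue.Forget(item) // malformed key: never retry
					return
				}
				// Read the current state from the lister's cache.
				pod, err := podLister.Pods(namespace).Get(name)
				if apierrors.IsNotFound(err) {
					queue.Forget(item) // deleted before we got to it
					return
				}
				if err != nil {
					queue.AddRateLimited(item) // transient error: retry with backoff
					return
				}
				fmt.Printf("Processing Pod: %s/%s\n", pod.Namespace, pod.Name)
				queue.Forget(item) // success: reset this key's backoff
			}()
		}
	}()

	<-ctx.Done()
}
```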

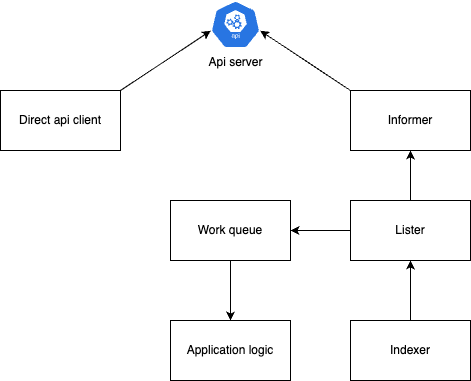

Client architecture for combined pattern

- Kubernetes API Server: Central hub for all resource interactions.

- Direct API Client: Directly interacts with the API server for CRUD operations.

- Informer: Watches the API server and maintains a local cache.

- Lister: Queries the Informer’s local cache for optimized reads.

- Work Queue: Decouples event detection from processing.

- Indexer: Organizes cached data for fast lookup.

- Application Logic: Consumes events or resources and implements business logic.

Why these patterns matter

Scalability

- Reduces API server load.

- Efficient caching.

- Rate limiting keeps retries from overwhelming the API server.

Reliability

- Automatic reconnection.

- Retry mechanisms.

- Event ordering.

Performance

- Local caching.

- Indexed lookups.

- Deduplicated processing: repeated events for the same key collapse into one work item.

Consistency

- Watch-based updates.

- Event ordering.

- Optimistic concurrency.